Over the past six months, we have been working hard to integrate an AI agent into the Workflow Orchestrator (WFO). This software, which SURF is actively developing in collaboration with other international parties, has been enhanced with retrieval-augmented generation (RAG) functionality. This makes it possible to search the orchestrator’s database comprehensively and to extract all kinds of cross-sectional information from the orchestrator using natural language.

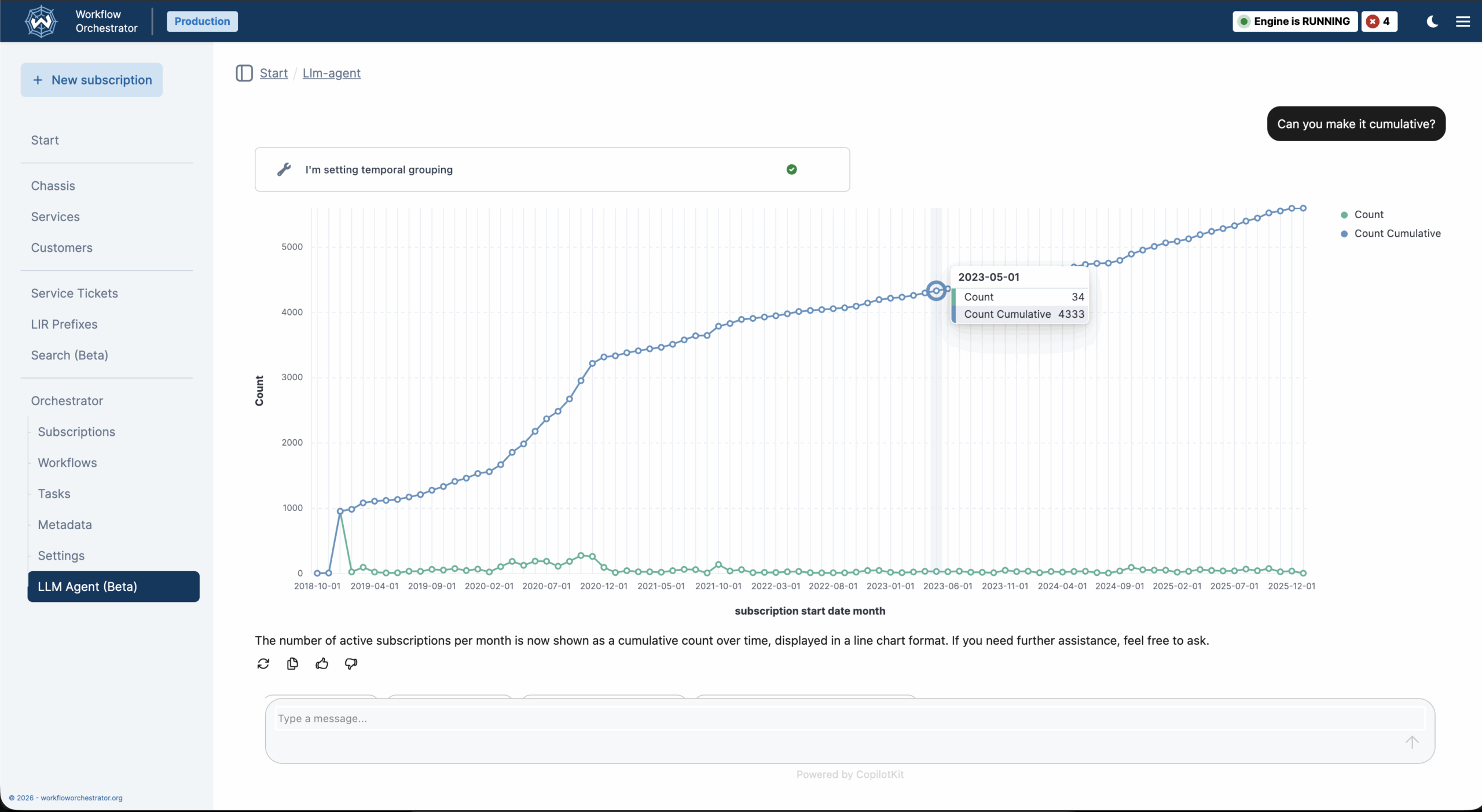

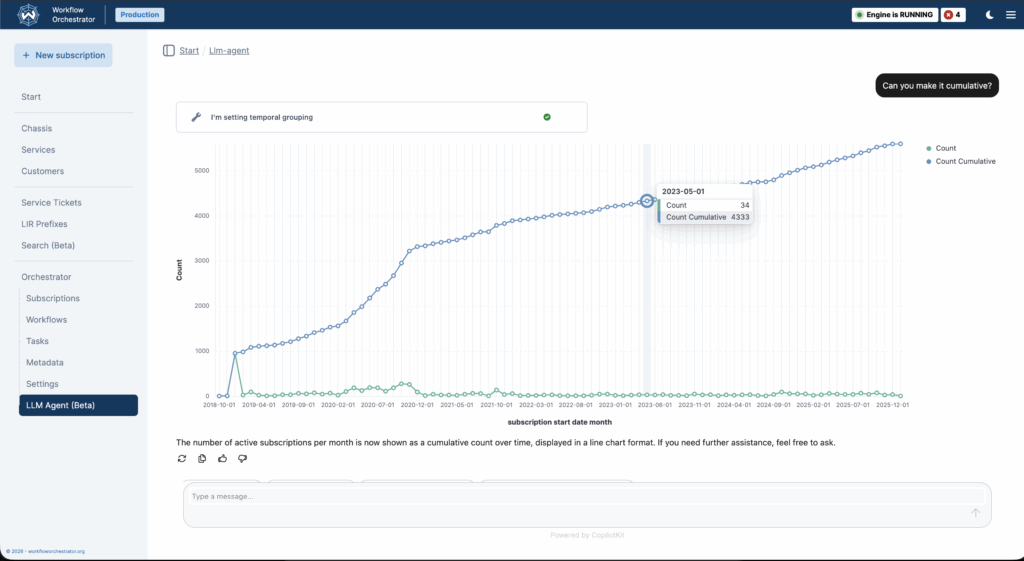

The diagram shows an example of what this looks like in the SURF installation of the WFO software: the growth in the number of services delivered on the SURF network using the orchestrator since 2018.

Open source and vendor agnostic

An important criterion in developing this LLM integration was the ability to work with different LLMs without depending on a cloud service. To make this possible, we ensured during development that the implementation follows the latest standards. As a result, you can integrate an LLM of your choice, as long as it exposes an API that follows the OpenAI API specification. During development, we experimented with several LLMs, including those available on SURF’s AI-HUB platform. This platform, developed for research and education in the Netherlands, makes open-source LLMs available that are hosted in SURF’s data centre.
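To illustrate what this vendor independence means in practice, here is a minimal sketch of a client that talks to any endpoint following the OpenAI chat completions format. It is not the WFO implementation. The function names, the system prompt, and the example base URL and model name are assumptions made for illustration; only the `/chat/completions` path and the request and response shape come from the OpenAI API specification.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, question: str) -> urllib.request.Request:
    """Build a POST request for an OpenAI-compatible chat completions endpoint.

    base_url is the API root of whichever provider you use (hypothetical here),
    for example a self-hosted model server or a hosted platform.
    """
    payload = {
        "model": model,
        "messages": [
            # Illustrative system prompt, not taken from the WFO code base.
            {"role": "system", "content": "You answer questions about orchestrator data."},
            {"role": "user", "content": question},
        ],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Switching to a different LLM then comes down to changing `base_url`, `api_key` and `model`, for instance `build_chat_request("https://llm.example.org/v1", key, "example-model", "How many services were delivered since 2018?")`. The rest of the code stays the same.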

What next?

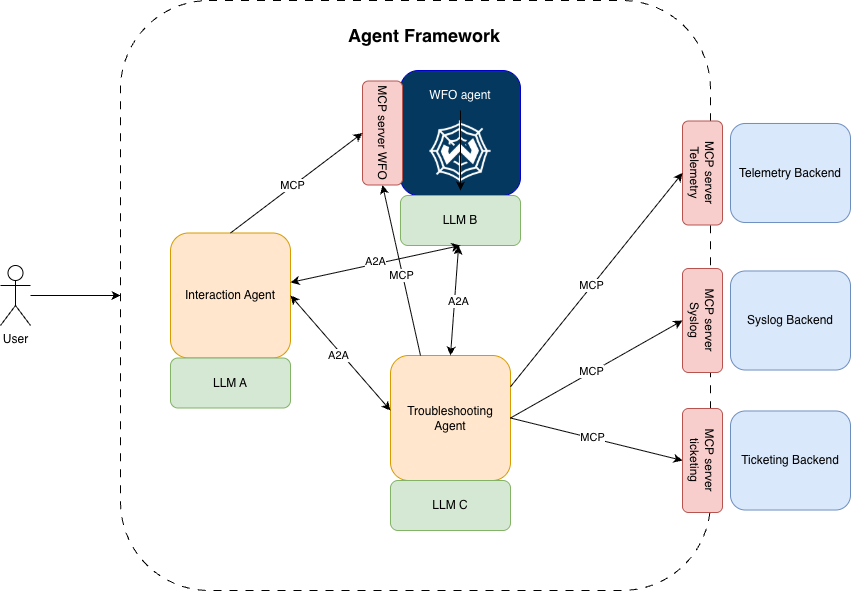

In the coming year, we want to continue building an agentic ecosystem within the SURF network department. Using machine learning and generative AI, we will try to automate troubleshooting as much as possible so that we can respond to (and perhaps anticipate) incidents in the network as quickly as possible.

Would you like to know more?

For a detailed technical explanation, be sure to read the blog of Tim Frölich, a software developer at ShopVirge who did much of the work to build this integration.